Machine Learning Container Templates

Project description

mlt


MLT: Like Keras for Kubernetes

- @mas-dse-greina

mlt aids in the creation of containers for machine learning jobs. It does so by making it easy to use container and Kubernetes object templates.

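We have found it useful to share project templates for various machine learning frameworks. Some frameworks, such as mxnet and TensorFlow, have native support through Kubernetes operators. Others do not, but there are still best practices for running them on a Kubernetes cluster.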

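Getting boilerplate code at the start of a project is only part of the picture: best practices may change over time. mlt lets existing projects adapt to those changes without having to reset and start from scratch.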

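mlt also addresses another aspect of application development: iterative container creation. Storage and container creation are supposed to be fast, so why not rebuild containers automatically? mlt has a --watch option that gives you an IDE-like experience. When a change is detected, a timer starts and then triggers a container rebuild. Lint checks and unit tests can run during this step as an early indicator of whether the code will work on the cluster. Once the container is built, it is pushed to the cluster's container registry. From there, redeploying the Kubernetes objects with mlt deploy is a quick step.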
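The "change detected, timer starts, rebuild fires" loop described above is a debounce. The Python 3 sketch below illustrates the idea only and is not mlt's implementation: the names snapshot, DebouncedRebuilder and watch are hypothetical, changes are detected by polling file modification times, and the 2-second delay is an arbitrary assumption.

```python
import os
import time
from typing import Callable, Dict, Optional


def snapshot(root: str) -> Dict[str, float]:
    """Map every file under root to its last-modified time."""
    mtimes: Dict[str, float] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[path] = os.path.getmtime(path)
            except OSError:
                pass  # the file disappeared between listing and stat
    return mtimes


class DebouncedRebuilder:
    """Call rebuild() once no new change has arrived for `delay` seconds."""

    def __init__(self, rebuild: Callable[[], None], delay: float = 2.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rebuild = rebuild
        self.delay = delay
        self.clock = clock
        self.deadline: Optional[float] = None

    def on_change(self) -> None:
        # Every new change (re)starts the timer, so a burst of saves builds once.
        self.deadline = self.clock() + self.delay

    def tick(self) -> bool:
        # Fire once the timer has expired; report whether a rebuild ran.
        if self.deadline is not None and self.clock() >= self.deadline:
            self.deadline = None
            self.rebuild()
            return True
        return False


def watch(root: str, rebuild: Callable[[], None], poll: float = 0.5) -> None:
    """Poll root forever and trigger a debounced rebuild when files change."""
    rebuilder = DebouncedRebuilder(rebuild)
    previous = snapshot(root)
    while True:
        current = snapshot(root)
        if current != previous:
            rebuilder.on_change()
            previous = current
        rebuilder.tick()
        time.sleep(poll)
```

In a fuller setup, the rebuild callable is where lint and unit tests would run before the image is built and pushed; that wiring is deliberately left out of this sketch.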

Build

Prerequisites

- Python (2.7 or higher) development environment. This typically includes build-essential, libssl-dev, libffi-dev and python-dev

- Docker

- kubectl

- git

- python

- pip

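Optional prerequisites

- kubetail (for the mlt logs command)

- Ksync (for the mlt sync command)

- ks (version 0.11.0 is required for volume support in the horovod template)

- TFJob operator (for the distributed TensorFlow templates)

- PyTorch operator (for the pytorch-distributed template)

- jq (for the tensorboard template)

- openssh-server (for the horovod template)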
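The command-line prerequisites above can be checked before running mlt. The following Python 3 sketch is not part of mlt: it assumes each tool is installed under the executable name shown (docker, kubectl, git, python, pip, kubetail, ksync, ks, jq), and it skips the TFJob and PyTorch operators and openssh-server, which are cluster-side components or packages rather than commands found on PATH.

```python
import shutil
from typing import Callable, Dict, List, Optional

# Executable names are assumptions; adjust them for your system
# (for example, "python3" instead of "python").
REQUIRED = ["docker", "kubectl", "git", "python", "pip"]
OPTIONAL: Dict[str, str] = {
    "kubetail": "mlt logs",
    "ksync": "mlt sync",
    "ks": "volumes in the horovod template",
    "jq": "the tensorboard template",
}


def missing_tools(names: List[str],
                  which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    """Return the names that cannot be found on PATH, in their original order."""
    return [name for name in names if which(name) is None]


def report(which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    """Print what is missing and return the missing required tools."""
    missing = missing_tools(REQUIRED, which)
    for name in missing:
        print("required tool not found: " + name)
    for name in missing_tools(list(OPTIONAL), which):
        print("optional tool not found: %s (needed for %s)" % (name, OPTIONAL[name]))
    return missing
```

Calling report() prints a line per missing tool; an empty return value means every required tool was found.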

Users of Debian-based Linux distributions

- Run make debian_prereq_install to install all the dependencies mlt needs. We assume users have already set up kubectl and docker.

Installation

Install from PyPI

$ pip install mlt

Install from source

$ git clone git@github.com:IntelAI/mlt.git

Cloning into 'mlt'...

remote: Counting objects: 1981, done.

remote: Compressing objects: 100% (202/202), done.

remote: Total 1981 (delta 202), reused 280 (delta 121), pack-reused 1599

Receiving objects: 100% (1981/1981), 438.10 KiB | 6.54 MiB/s, done.

Resolving deltas: 100% (1078/1078), done.

$ cd mlt

$ pip install .

Create a local Python distribution

$ make dist

$ cd dist

$ ls mlt*

mlt-0.1.0a1+12.gf49c412.dirty-py2.py3-none-any.whl
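Wheel filenames follow the PEP 427 pattern name-version[-build]-python-abi-platform.whl, so the file above is a pure-Python wheel for both Python 2 and 3. The local version label +12.gf49c412.dirty appears to be derived from git (commits since a tag, a commit hash, and a dirty working tree), though that reading is an assumption. A minimal Python 3 sketch that splits such a name, handling only the common case without a build tag:

```python
from typing import NamedTuple


class WheelName(NamedTuple):
    name: str
    version: str
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split name-version-python-abi-platform.whl (no optional build tag)."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel: " + filename)
    parts = filename[: -len(".whl")].split("-")
    if len(parts) != 5:
        raise ValueError("unexpected wheel filename: " + filename)
    return WheelName(*parts)


wheel = parse_wheel_filename("mlt-0.1.0a1+12.gf49c412.dirty-py2.py3-none-any.whl")
print(wheel.version)       # 0.1.0a1+12.gf49c412.dirty
print(wheel.python_tag)    # py2.py3: usable on Python 2 and 3
print(wheel.platform_tag)  # any: no compiled, platform-specific code
```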

Usage summary

mlt deployment example

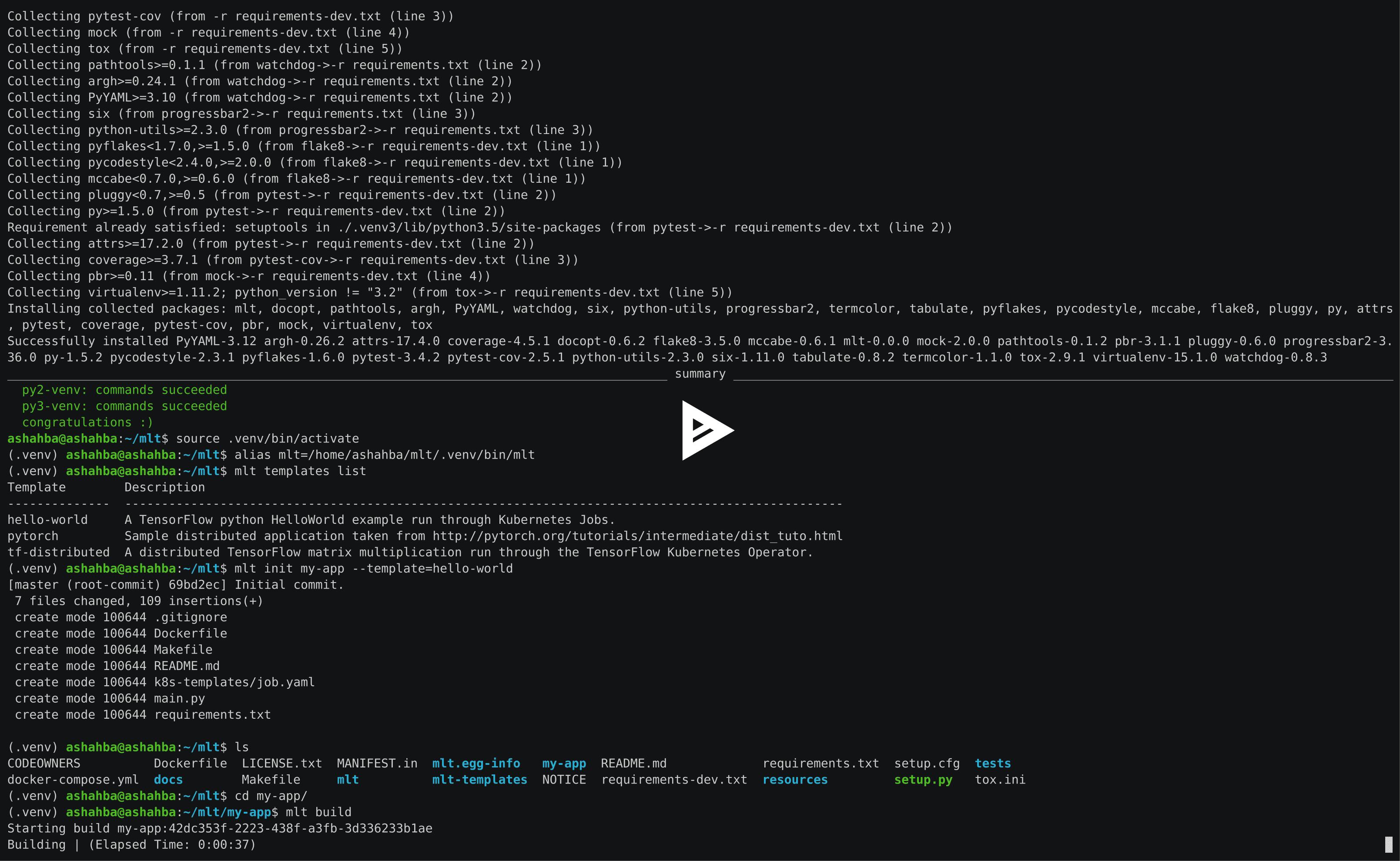

$ mlt template list

Template Description

------------------- --------------------------------------------------------------------------------------------------

hello-world A TensorFlow python HelloWorld example run through Kubernetes Jobs.

pytorch Sample distributed application taken from https://pytorch.ac.cn/tutorials/intermediate/dist_tuto.html

pytorch-distributed A distributed PyTorch MNIST example run using the pytorch-operator.

tf-dist-mnist A distributed TensorFlow MNIST model which designates worker 0 as the chief.

tf-distributed A distributed TensorFlow matrix multiplication run through the TensorFlow Kubernetes Operator.

$ mlt init my-app --template=hello-world

[master (root-commit) 40239a2] Initial commit.

7 files changed, 191 insertions(+)

create mode 100644 mlt.json

create mode 100644 Dockerfile

create mode 100644 Makefile

create mode 100644 k8s-templates/tfjob.yaml

create mode 100644 k8s/README.md

create mode 100644 main.py

create mode 100644 requirements.txt

$ cd my-app

# NOTE: `mlt config` has been renamed to `mlt template_config` to avoid confusion regarding developer config settings.

# List template-specific config parameters

$ mlt template_config list

Parameter Name Value

---------------------------- ----------------------

registry my-project-12345

namespace my-app

name my-app

template_parameters.greeting Hello

# Update the greeting parameter

$ mlt template_config set template_parameters.greeting Hi

# Check the template_config list to see the updated parameter value

$ mlt template_config list

Parameter Name Value

---------------------------- ----------------------

registry my-project-12345

namespace my-app

name my-app

template_parameters.greeting Hi
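The dotted name template_parameters.greeting suggests that template parameters are nested under a template_parameters object in mlt.json. Assuming that layout (inferred from the listing above, not taken from mlt's source), this Python 3 sketch shows how a dotted key maps onto nested JSON and back; set_dotted and flatten are hypothetical helpers.

```python
import json
from typing import Any, Dict


def set_dotted(config: Dict[str, Any], dotted_key: str, value: Any) -> None:
    """Set config["a"]["b"] = value for dotted_key "a.b", creating dicts as needed."""
    *parents, leaf = dotted_key.split(".")
    node = config
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value


def flatten(config: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn nested dicts back into dotted names, like the listing above."""
    flat: Dict[str, Any] = {}
    for key, value in config.items():
        name = prefix + key
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


# Hypothetical mlt.json contents mirroring the first listing.
config = json.loads("""{
  "registry": "my-project-12345",
  "namespace": "my-app",
  "name": "my-app",
  "template_parameters": {"greeting": "Hello"}
}""")
set_dotted(config, "template_parameters.greeting", "Hi")
print(flatten(config)["template_parameters.greeting"])  # Hi
```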

$ mlt build

Starting build my-app:71fb176d-28a9-46c2-ab51-fe3d4a88b02c

Building |######################################################| (ETA: 0:00:00)

Pushing |######################################################| (ETA: 0:00:00)

Built and pushed to gcr.io/my-project-12345/my-app:71fb176d-28a9-46c2-ab51-fe3d4a88b02c

$ mlt deploy

Deploying gcr.io/my-project-12345/my-app:71fb176d-28a9-46c2-ab51-fe3d4a88b02c

Inspect created objects by running:

$ kubectl get --namespace=my-app all
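One way to picture what mlt deploy does with the k8s-templates/ directory created by mlt init is placeholder substitution: the image pushed by mlt build and the template parameters are filled into a Kubernetes manifest. The pod names in this transcript (my-app-<uuid>-<suffix>) are consistent with a Job named after the app plus a per-deploy id. The Python 3 sketch below uses the standard string.Template class; the Job manifest and the placeholder names ($app, $run, $namespace, $image, $greeting) are illustrative assumptions, not mlt's actual templates.

```python
import uuid
from string import Template
from typing import Dict

# Hypothetical stand-in for a file in k8s-templates/; the real templates may
# use different placeholder names and a different Kubernetes object kind.
JOB_TEMPLATE = Template("""\
apiVersion: batch/v1
kind: Job
metadata:
  name: $app-$run
  namespace: $namespace
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: $app
        image: $image
        env:
        - name: GREETING
          value: "$greeting"
""")


def render(app: str, namespace: str, image: str, params: Dict[str, str]) -> str:
    """Fill the template; a fresh run id keeps each deployment's objects unique."""
    run = str(uuid.uuid4())
    return JOB_TEMPLATE.substitute(app=app, run=run, namespace=namespace,
                                   image=image, **params)


manifest = render(
    app="my-app",
    namespace="my-app",
    image="gcr.io/my-project-12345/my-app:71fb176d-28a9-46c2-ab51-fe3d4a88b02c",
    params={"greeting": "Hi"},
)
print(manifest)
```

Template.substitute raises KeyError for any placeholder left unfilled, so a missing template parameter surfaces before anything is sent to the cluster.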

### Provide -l flag to tail logs immediately after deploying.

$ mlt deploy --no-push -l

Skipping image push

Deploying gcr.io/my-project-12345/my-app:b9f124d2-ef34-4d66-b137-b8a6026bf782

Inspect created objects by running:

$ kubectl get --namespace=my-app all

Checking for pod(s) readiness

Will tail 1 logs...

my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg

[my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg] 2018-05-17 22:28:34.578791: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 AVX512F FMA

[my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg] b'Hello, TensorFlow!'

$ mlt status

NAME READY STATUS RESTARTS AGE IP NODE

my-app-897cb68f-e91f-42a0-968e-3e8073334450-vvpqj 1/1 Running 0 14s 10.23.45.67 gke-my-cluster-highmem-8-skylake-1
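In pod listings like the one above, the READY column reports ready containers over total containers, so 1/1 means the pod's single container is ready. The Python 3 sketch below checks that for every row; it is a hypothetical helper, not how mlt implements its "Checking for pod(s) readiness" step, and it relies on whitespace splitting, which works here because no column value contains spaces.

```python
from typing import List


def pods_ready(listing: str) -> bool:
    """Return True if every pod row shows all of its containers ready."""
    lines = [line for line in listing.strip().splitlines() if line.strip()]
    if not lines:
        return False
    ready_col = lines[0].split().index("READY")
    rows: List[List[str]] = [line.split() for line in lines[1:]]
    if not rows:
        return False  # no pods scheduled yet
    for row in rows:
        ready, total = row[ready_col].split("/")
        if ready != total:
            return False
    return True


sample = """NAME READY STATUS RESTARTS AGE IP NODE
my-app-897cb68f-e91f-42a0-968e-3e8073334450-vvpqj 1/1 Running 0 14s 10.23.45.67 gke-my-cluster-highmem-8-skylake-1
"""
print(pods_ready(sample))  # True
```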

### To deploy in interactive mode (using no-push as an example)

### NOTE: only basic functionality is supported at this time. Only one container and one pod in a deployment for now.

#### If more than one container in a deployment, we'll pick the first one we find and deploy that.

$ mlt deploy -i --no-push

Skipping image push

Deploying localhost:5000/test:d6c9c06b-2b64-4038-a6a9-434bf90d6acc

Inspect created objects by running:

$ kubectl get --namespace=my-app all

Connecting to pod...

root@test-9e035719-1d8b-4e0c-adcb-f706429ffeac-wl42v:/src/app# ls

Dockerfile Makefile README.md k8s k8s-templates main.py mlt.json requirements.txt

$ mlt logs

Checking for pod(s) readiness

Will tail 1 logs...

my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg

[my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg] 2018-05-17 22:28:34.578791: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 AVX512F FMA

[my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg] b'Hello, TensorFlow!'

# Displays events for the current job

$ mlt events

LAST SEEN FIRST SEEN COUNT NAME KIND SUBOBJECT TYPE REASON SOURCE MESSAGE

6m 6m 1 my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg.152f8f13466696b4 Pod Normal Scheduled default-scheduler Successfully assigned my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg to gke-dls-us-n1-highmem-8-skylake-82af83b4-8nvh

6m 6m 1 my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg.152f8f134ff373d7 Pod Normal SuccessfulMountVolume kubelet, gke-dls-us-n1-highmem-8-skylake-82af83b4-8nvh MountVolume.SetUp succeeded for volume "default-token-grq2c"

6m 6m 1 my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg.152f8f1399b33ba0 Pod spec.containers{my-app} Normal Pulled kubelet, gke-dls-us-n1-highmem-8-skylake-82af83b4-8nvh Container image "gcr.io/my-project-12345/my-app:b9f124d2-ef34-4d66-b137-b8a6026bf782" already present on machine

6m 6m 1 my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg.152f8f139dec0dc3 Pod spec.containers{my-app} Normal Created kubelet, gke-dls-us-n1-highmem-8-skylake-82af83b4-8nvh Created container

6m 6m 1 my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg.152f8f13a2ea0ff6 Pod spec.containers{my-app} Normal Started kubelet, gke-dls-us-n1-highmem-8-skylake-82af83b4-8nvh Started container

6m 6m 1 my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33.152f8f13461279e4 Job Normal SuccessfulCreate job-controller Created pod: my-app-09aa35f4-bdf8-4da8-8400-8728bf7afa33-sqzqg

Examples

Template development

To add a new template, see the Template Developers Manual.


Download files


Source distribution

No source distribution files are available for this release.

Built distribution

mlt-0.3.0-py2.py3-none-any.whl (126.7 KB)