AllenNLP CoReference Resolution的多语言方法,包括spaCy的包装器。

项目描述

跨语言共指引用

共指引用很神奇,但训练模型所需的数据非常稀缺。在我们的情况下,非英语语言的可用训练数据也证明标注不佳。因此,跨语言共指引用假设使用英语数据和跨语言嵌入训练的模型应该适用于具有类似句子结构的语言。

安装

pip install crosslingual-coreference

快速入门

from crosslingual_coreference import Predictor

text = (

"Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"

" that location, Nissin was founded. Many students survived by eating these"

" noodles, but they don't even know him."

)

# choose minilm for speed/memory and info_xlm for accuracy

predictor = Predictor(

language="en_core_web_sm", device=-1, model_name="minilm"

)

print(predictor.predict(text)["resolved_text"])

print(predictor.pipe([text])[0]["resolved_text"])

# Note you can also get 'cluster_heads' and 'clusters'

# Output

#

# Do not forget about Momofuku Ando!

# Momofuku Ando created instant noodles in Osaka.

# At Osaka, Nissin was founded.

# Many students survived by eating instant noodles,

# but Many students don't even know Momofuku Ando.

模型

截至目前,有四个模型可用:“spanbert”、“info_xlm”、“xlm_roberta”、“minilm”,它们在OntoNotes Release 5.0英语数据上的分数分别为83、77、74和74。

- “minilm”模型在多语言和英语文本中都是最佳的质量与速度权衡。

- “info_xlm”模型对多语言文本产生最佳质量。

- AllenNLP的“spanbert”模型对英语文本产生最佳质量。

通过分块/分批处理解决内存内存不足错误

from crosslingual_coreference import Predictor

predictor = Predictor(

language="en_core_web_sm",

device=0,

model_name="minilm",

chunk_size=2500,

chunk_overlap=2,

)

使用spaCy管道

import spacy

text = (

"Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"

" that location, Nissin was founded. Many students survived by eating these"

" noodles, but they don't even know him."

)

nlp = spacy.load("en_core_web_sm")

nlp.add_pipe(

"xx_coref", config={"chunk_size": 2500, "chunk_overlap": 2, "device": 0}

)

doc = nlp(text)

print(doc._.coref_clusters)

# Output

#

# [[[4, 5], [7, 7], [27, 27], [36, 36]],

# [[12, 12], [15, 16]],

# [[9, 10], [27, 28]],

# [[22, 23], [31, 31]]]

print(doc._.resolved_text)

# Output

#

# Do not forget about Momofuku Ando!

# Momofuku Ando created instant noodles in Osaka.

# At Osaka, Nissin was founded.

# Many students survived by eating instant noodles,

# but Many students don't even know Momofuku Ando.

print(doc._.cluster_heads)

# Output

#

# {Momofuku Ando: [5, 6],

# instant noodles: [11, 12],

# Osaka: [14, 14],

# Nissin: [21, 21],

# Many students: [26, 27]}

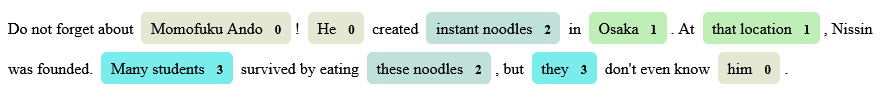

可视化spaCy管道

此功能仅适用于spaCy >= 3.3。

import spacy

from spacy.tokens import Span

from spacy import displacy

text = (

"Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"

" that location, Nissin was founded. Many students survived by eating these"

" noodles, but they don't even know him."

)

nlp = spacy.load("nl_core_news_sm")

nlp.add_pipe("xx_coref", config={"model_name": "minilm"})

doc = nlp(text)

spans = []

for idx, cluster in enumerate(doc._.coref_clusters):

for span in cluster:

spans.append(

Span(doc, span[0], span[1]+1, str(idx).upper())

)

doc.spans["custom"] = spans

displacy.render(doc, style="span", options={"spans_key": "custom"})

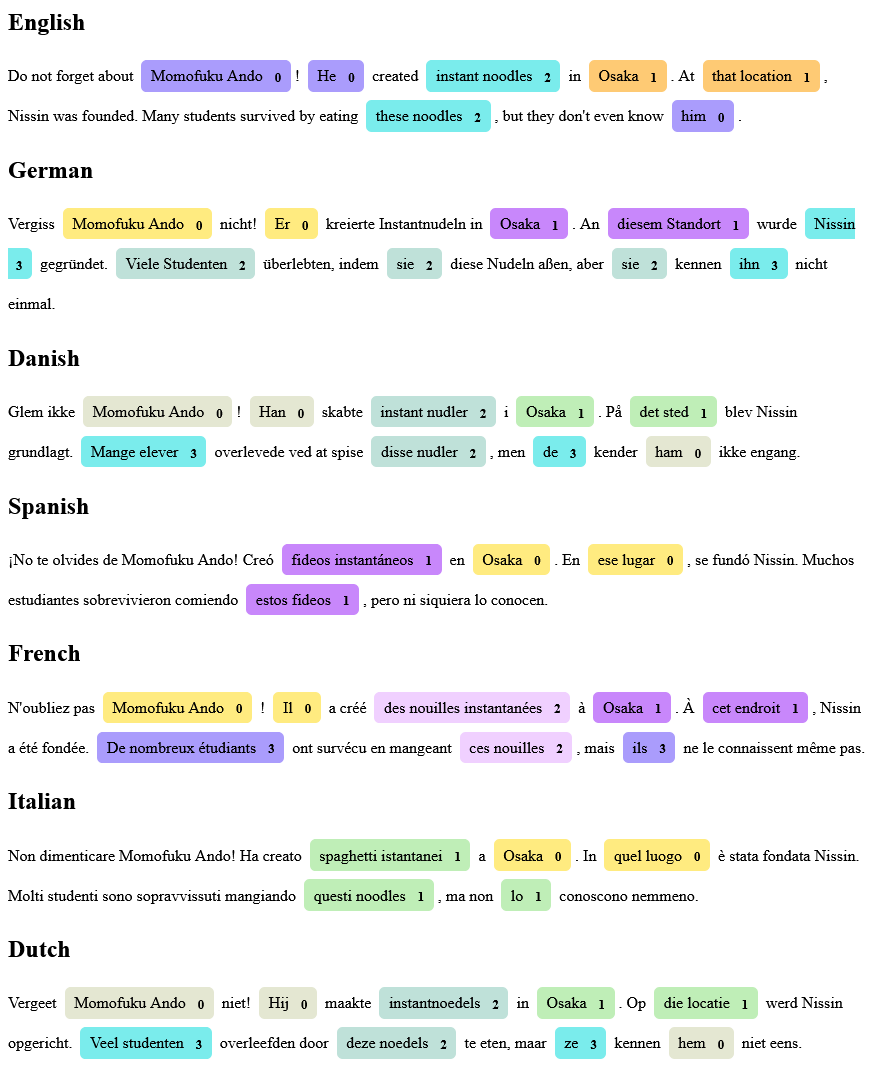

更多示例

项目详情

下载文件

下载适用于您平台的文件。如果您不确定选择哪个,请了解更多关于安装包的信息。

源分布

crosslingual-coreference-0.3.1.tar.gz (11.7 kB 查看散列)

构建分布

关闭

crosslingual-coreference-0.3.1.tar.gz的散列

| 算法 | 散列摘要 | |

|---|---|---|

| SHA256 | cbd46de0afedf75d3315c39e9fecb851112e29cb7d8b3d85fdb7eb39ac63c25e |

|

| MD5 | 8f91bf2ff7e8c471dbda8972ce098147 |

|

| BLAKE2b-256 | 81a07dca701ec4ad2eef0df1de5d5952dbae2c4c86ade79fb9a4e23bd36dd1d4 |

关闭

crosslingual_coreference-0.3.1-py3-none-any.whl的散列

| 算法 | 散列摘要 | |

|---|---|---|

| SHA256 | bd44ec22b2a1a02eb03203d04c4c92d819e2ac929baa2b825f21de7106beeedd |

|

| MD5 | a2de1f451d34036e0d1286c88bda50db |

|

| BLAKE2b-256 | 2b8de4ad53fd3a0f805658a140bd8e0affee4436a05831141660dc2a4103fcda |